DeepSeek-V3: Pioneering the Future of Open-Source AI

Open-Source AI with a Revolutionary Mixture-of-Experts Architecture


DeepSeek-V3, released by the Chinese AI firm DeepSeek, is an open-source large language model (LLM). Its efficient architecture and strong benchmark results set a new standard for openly available models.

Overview and Architecture

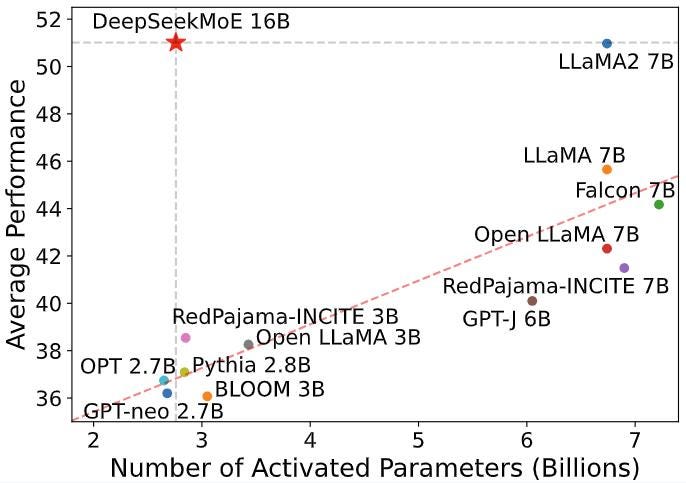

DeepSeek-V3 has 671 billion parameters in total and uses a Mixture-of-Experts (MoE) architecture.

|

| MoE |

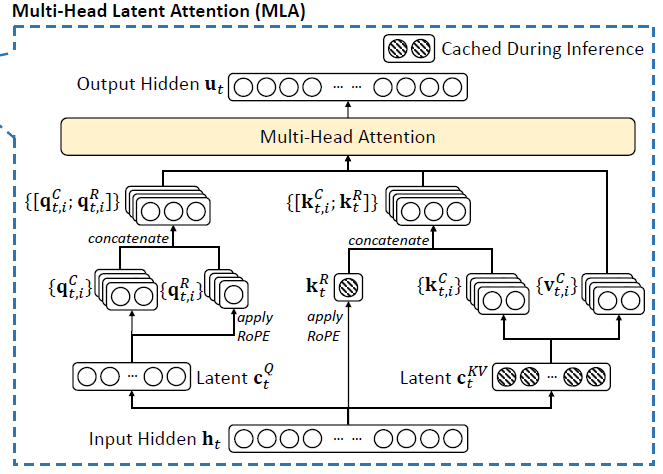

This design activates only 37 billion of those parameters for each token. That cuts the computation needed per token while keeping performance high. The model combines two architectures. Multi-head Latent Attention (MLA) compresses the attention key-value cache for faster inference. DeepSeekMoE provides cost-effective training.
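The following pure-Python sketch shows top-k expert routing with a shared expert, which is how per-token activation works. It loosely follows the gating that DeepSeek's technical report describes for DeepSeekMoE:

- sigmoid affinity scores;
- top-k selection;
- gate weights normalized over the chosen experts only.

The sizes, the toy scaling "experts", and the helper names (`route`, `moe_forward`, `make_expert`) are hypothetical. According to the report, each MoE layer has 256 routed experts plus 1 shared expert, and 8 routed experts are active per token. At full scale, 37B of 671B parameters is roughly 5.5% of the model per token.

```python
import math
import random
from typing import Callable

Vector = list[float]
Expert = Callable[[Vector], Vector]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def route(x: Vector, centroids: list[Vector], k: int) -> list[tuple[int, float]]:
    """Return (expert_index, gate_weight) pairs for the k highest-affinity experts."""
    # Sigmoid affinity between the token and each routed expert's centroid vector.
    scores = [1.0 / (1.0 + math.exp(-dot(x, c))) for c in centroids]
    top = sorted(range(len(scores)), key=scores.__getitem__, reverse=True)[:k]
    # Normalize over the selected experts only, so the gate weights sum to 1.
    total = sum(scores[i] for i in top)
    return [(i, scores[i] / total) for i in top]


def moe_forward(x: Vector, experts: list[Expert], centroids: list[Vector],
                k: int, shared: Expert) -> tuple[Vector, list[int]]:
    """Shared-expert output plus the gate-weighted outputs of the k routed experts."""
    out = shared(x)  # the shared expert sees every token
    selected = []
    for i, w in route(x, centroids, k):
        y = experts[i](x)  # only the selected routed experts are evaluated
        out = [o + w * yi for o, yi in zip(out, y)]
        selected.append(i)
    return out, selected


def make_expert(scale: float) -> Expert:
    # Toy stand-in: a real expert is a small feed-forward network.
    return lambda v: [scale * t for t in v]


random.seed(0)
DIM, NUM_EXPERTS, TOP_K = 8, 16, 4  # toy sizes (the report: 256 routed, 8 active)
experts = [make_expert(1.0 + i / 10) for i in range(NUM_EXPERTS)]
centroids = [[random.gauss(0, 1) for _ in range(DIM)] for _ in range(NUM_EXPERTS)]
shared = make_expert(1.0)
token = [random.gauss(0, 1) for _ in range(DIM)]

out, selected = moe_forward(token, experts, centroids, TOP_K, shared)
print(f"evaluated {len(selected)} of {NUM_EXPERTS} routed experts: {selected}")
```

Only the `TOP_K` selected experts run for this token, and that is where the compute saving comes from. The parameters of the unselected experts are never touched.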

It was pre-trained on 14.8 trillion tokens and then refined with supervised fine-tuning and reinforcement learning to improve output quality.
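The widely used rule of thumb C ≈ 6·N·D gives a rough idea of what that token count implies for training compute. Here N is the number of parameters active per token and D is the number of training tokens. The estimate below is only an approximation: it ignores attention and routing overhead, and it is not a figure DeepSeek reports. The comparison with a hypothetical dense 671B model shows why activating only 37B parameters per token matters.

```python
def training_flops(active_params: float, tokens: float) -> float:
    """Rule-of-thumb training compute: ~6 FLOPs per active parameter per token
    (about 2 for the forward pass and 4 for the backward pass)."""
    return 6.0 * active_params * tokens


TOKENS = 14.8e12        # pre-training tokens, from the text
ACTIVE_PARAMS = 37e9    # parameters activated per token
TOTAL_PARAMS = 671e9    # total parameters

moe = training_flops(ACTIVE_PARAMS, TOKENS)
dense = training_flops(TOTAL_PARAMS, TOKENS)  # hypothetical dense model of the same size
print(f"MoE estimate:   {moe:.2e} FLOPs")
print(f"Dense estimate: {dense:.2e} FLOPs ({dense / moe:.1f}x more)")
```

Under this approximation, the MoE model needs about 3.29e24 FLOPs. A dense model with all 671B parameters active would need roughly 18 times more.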

Key Features and Functionalities

- Text-Based Model: Primarily designed for text processing, DeepSeek-V3 excels in coding, translation, and content generation.

- Efficiency: The MoE architecture allows for selective activation of parameters, reducing resource consumption and improving processing speed.

- Performance: DeepSeek's own evaluations indicate that DeepSeek-V3 outperforms other open models, such as Meta's Llama 3.1 (405B) and Qwen 2.5 (72B), across a range of benchmarks. These include BIG-Bench Hard (BBH) and Massive Multitask Language Understanding (MMLU).

- Load Balancing: The model balances load across its experts with an auxiliary-loss-free strategy. This avoids the performance degradation that conventional auxiliary balancing losses can cause.
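DeepSeek's technical report describes the auxiliary-loss-free balancing as a feedback loop:

- Each expert carries a bias, which is added to its affinity score only when choosing the top-k experts.
- After each training step, the bias moves down by a fixed speed γ (`gamma`) for overloaded experts and up for underloaded ones.

The simulation below is a minimal illustration of that loop under hypothetical settings: 8 experts, top-2 routing, a made-up affinity skew and γ = 0.01. The report also uses a small complementary sequence-wise balance loss, which is omitted here.

```python
import random


def select_experts(scores: list[float], bias: list[float], k: int) -> list[int]:
    """Top-k experts by score + bias. The bias steers selection only; the gate
    weights for the chosen experts would still come from the unbiased scores."""
    ranked = sorted(range(len(scores)), key=lambda i: scores[i] + bias[i], reverse=True)
    return ranked[:k]


def update_bias(bias: list[float], load: list[int], gamma: float) -> list[float]:
    """Lower the bias of overloaded experts and raise it for underloaded ones."""
    mean = sum(load) / len(load)
    return [b - gamma if n > mean else b + gamma if n < mean else b
            for b, n in zip(bias, load)]


def simulate(num_experts: int = 8, k: int = 2, tokens_per_step: int = 512,
             steps: int = 200, gamma: float = 0.01, seed: int = 0) -> list[float]:
    """Return the max/mean expert-load ratio per step (1.0 means perfectly balanced)."""
    rng = random.Random(seed)
    # Hypothetical skew: higher-index experts have systematically higher affinity.
    skew = [0.05 * i for i in range(num_experts)]
    bias = [0.0] * num_experts
    history = []
    for _ in range(steps):
        load = [0] * num_experts
        for _ in range(tokens_per_step):
            scores = [s + rng.gauss(0, 0.1) for s in skew]
            for i in select_experts(scores, bias, k):
                load[i] += 1
        history.append(max(load) / (sum(load) / num_experts))
        bias = update_bias(bias, load, gamma)
    return history


history = simulate()
print(f"first step: max/mean load = {history[0]:.2f}")
print(f"last 50 steps (avg): {sum(history[-50:]) / 50:.2f}")
```

In this toy setup, the first steps send most tokens to the highest-affinity experts. The biases then converge so that load spreads across all experts, without adding any loss term to the training objective.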

Technology and Framework

DeepSeek-V3 is built on a robust technological foundation that includes:

- Mixture-of-Experts Architecture: A learned router sends each token to a small subset of experts. The model therefore activates only the parameters relevant to that input.

- Training Infrastructure: The full training run took 2.788 million GPU hours on Nvidia H800 GPUs. DeepSeek presents this as an economical budget for a model of this scale.

- Open Source Availability: DeepSeek-V3 is hosted on Hugging Face, making it accessible for developers and researchers to utilize and modify.
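Simple arithmetic converts the GPU-hour figure into a dollar estimate and a rough wall-clock time. The sketch below relies on three figures stated in DeepSeek's technical report:

- the per-stage GPU-hour breakdown;
- the $2 per GPU-hour rental price;
- the 2,048-GPU H800 cluster.

As the report notes, this covers only the official training run, not prior research or ablation experiments. The helper name `cost_usd` is illustrative.

```python
# H800 GPU-hours per training stage (in thousands), as reported in the
# DeepSeek-V3 technical report.
GPU_HOURS_K = {"pre-training": 2664, "context extension": 119, "post-training": 5}
USD_PER_GPU_HOUR = 2.0   # the report's assumed H800 rental price
CLUSTER_GPUS = 2048      # H800 cluster size described in the report


def cost_usd(gpu_hours_k: float, price: float = USD_PER_GPU_HOUR) -> float:
    """Convert thousands of GPU-hours into dollars at a flat rental price."""
    return gpu_hours_k * 1_000 * price


total_k = sum(GPU_HOURS_K.values())
for stage, hours_k in GPU_HOURS_K.items():
    print(f"{stage:<18}{hours_k:>6}K GPU-h   ${cost_usd(hours_k) / 1e6:.3f}M")
print(f"{'total':<18}{total_k:>6}K GPU-h   ${cost_usd(total_k) / 1e6:.3f}M")

# Rough wall-clock time for pre-training if the whole cluster runs in parallel.
days = GPU_HOURS_K["pre-training"] * 1_000 / CLUSTER_GPUS / 24
print(f"pre-training on {CLUSTER_GPUS} GPUs: ~{days:.0f} days")
```

The stages sum to 2,788K GPU-hours, or about $5.576M at the assumed price. Pre-training alone works out to roughly 54 days on the full cluster.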

Use Cases

DeepSeek-V3 can be applied across various domains:

- Education: Assisting in tutoring systems and generating educational content.

- Business: Automating customer support through chatbots and generating reports.

- Research: Aiding in data analysis and literature reviews by summarizing large volumes of text.

Availability

The model is available on Hugging Face under an open-source license, promoting accessibility for developers and enterprises looking to integrate advanced AI capabilities into their applications. This approach encourages innovation while allowing users to adapt the model for specific needs.

Future Prospects

As AI technology continues to evolve, DeepSeek-V3 represents a significant step towards cost-effective and efficient AI development. Its open-source nature could inspire further advancements in the field, potentially leading to more sophisticated models that incorporate multimodal capabilities in future iterations.

The focus on efficiency and performance positions DeepSeek-V3 as a strong contender against both open-source and proprietary models, paving the way for broader adoption in various industries.

DeepSeek-V3 exemplifies the potential of open-source AI models to challenge established players while providing accessible tools for developers worldwide. Its innovative architecture and robust performance metrics make it a noteworthy addition to the landscape of artificial intelligence.